Develop With Containers

Develop containerized applications

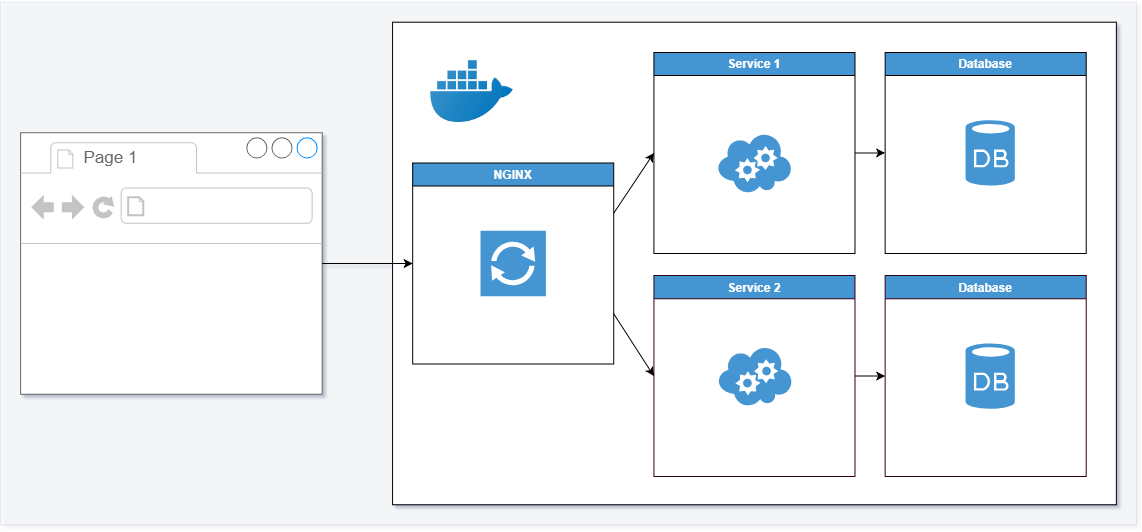

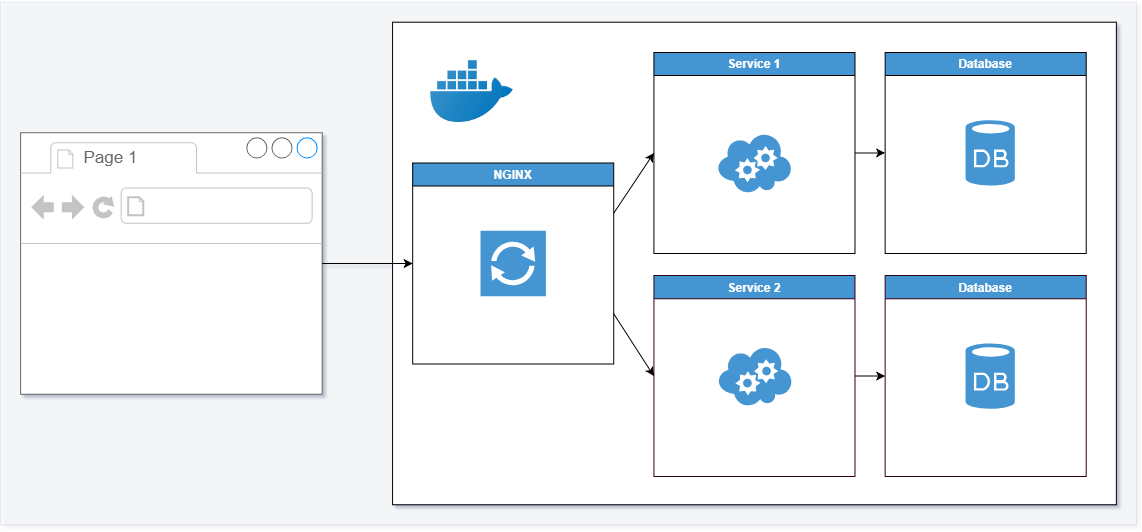

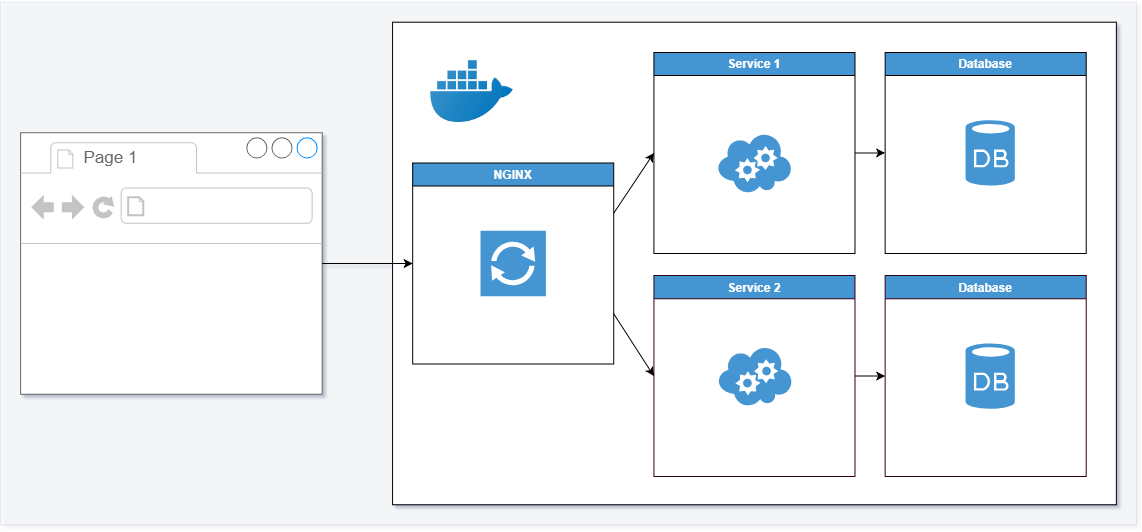

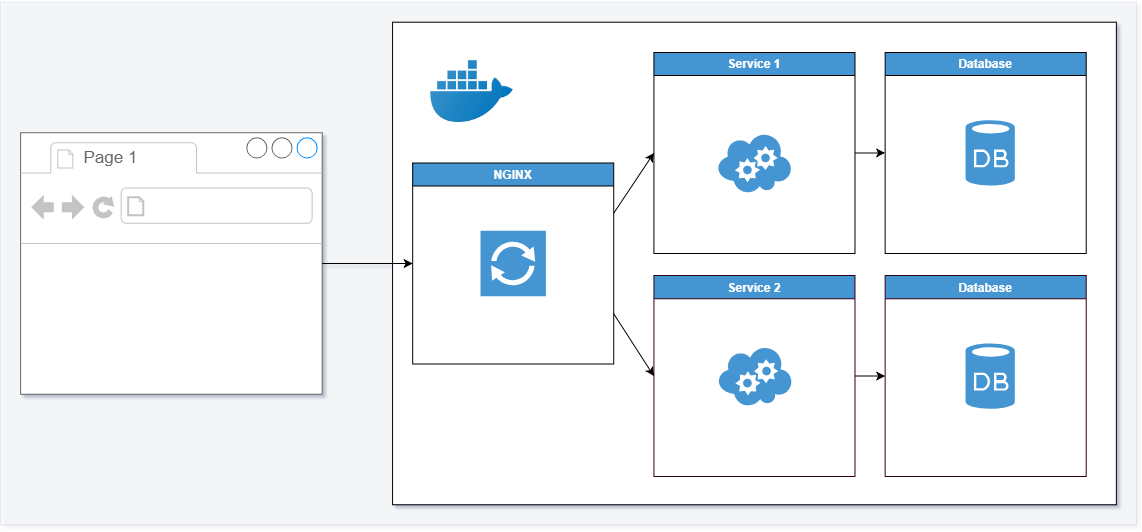

When using Docker to develop a microservice architecture, the easiest way to run it on a development machine is to put all the pieces into Docker containers and let the debugger attach to the container.

This can slow down your development process, because a change in the application can trigger the whole cycle of compiling, building the image and starting the container again.

The classic way of compiling and running in the IDE tends to be a lot faster.

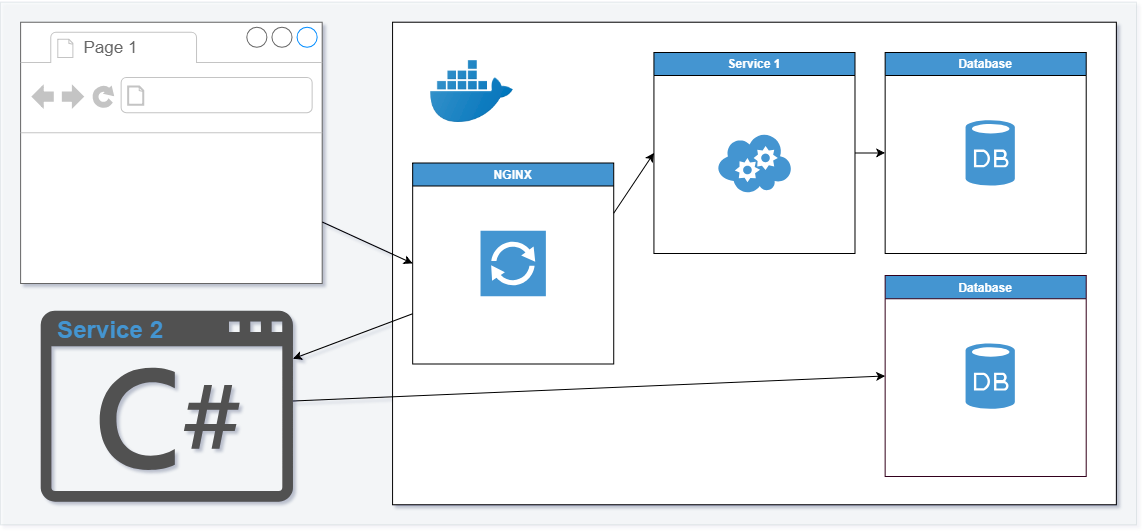

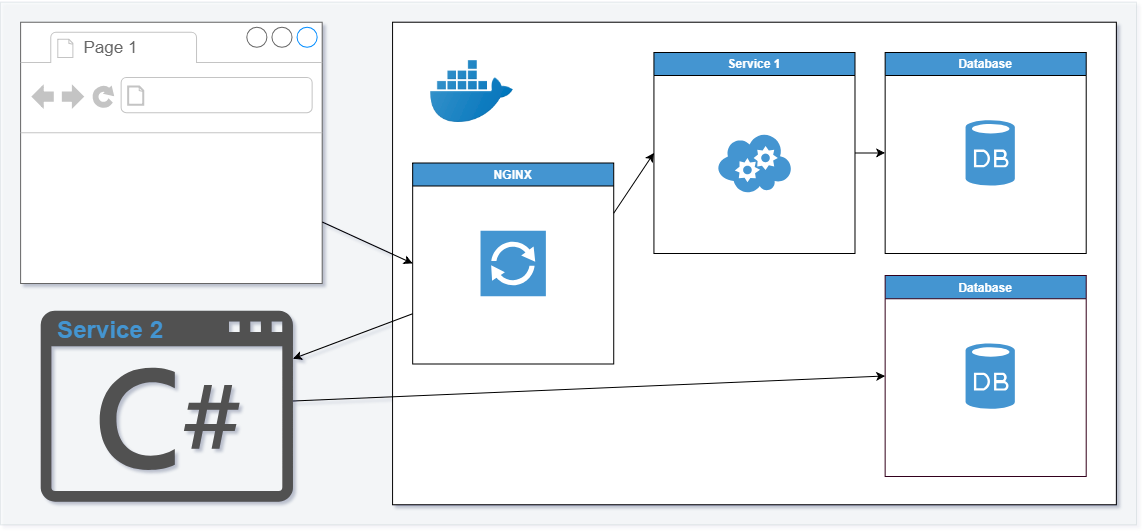

So I came up with a solution in which our nginx reverse proxy does not send requests for the services I am working on to their containers, but to the host, where I run them from the IDE.

All services I am not working on keep running as containers.

In my example, I am working on a simple .NET Core REST API as the backend server and on an Angular application as the frontend, while another backend service is just used as it is.
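The routing rule above comes down to one decision per service: where nginx's `proxy_pass` points. As a minimal sketch, the Python script below renders an nginx `server` block for a given set of services under development. It assumes the service names and ports from the example configuration (both apps listen on port 5000 inside the Docker network, and service2 listens on port 5001 when started from the IDE); the host port for service1, the `Service` type and the function names are hypothetical illustrations, not part of nginx or Docker.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    # Hypothetical description of one routed service.
    name: str            # compose service name, also used as the URL prefix
    container_port: int  # port the app listens on inside the Docker network
    host_port: int       # port the app listens on when started from the IDE


# Name that Docker Desktop resolves to the host machine from inside a container.
HOST_ALIAS = "host.docker.internal"


def upstream(service: Service, in_development: set[str]) -> str:
    """Return the proxy_pass target for one service."""
    if service.name in in_development:
        # Leave the Docker network and reach the process running in the IDE.
        return f"http://{HOST_ALIAS}:{service.host_port}"
    # Stay inside the compose network and reach the container directly.
    return f"http://{service.name}:{service.container_port}"


def location_block(service: Service, in_development: set[str]) -> str:
    """Render one nginx location block for the service's URL prefix."""
    return (
        f"location /{service.name}/ {{\n"
        "    proxy_set_header Host $host;\n"
        "    proxy_set_header X-Real-IP $remote_addr;\n"
        f"    proxy_pass {upstream(service, in_development)};\n"
        "}\n"
    )


def server_block(services: list[Service], in_development: set[str]) -> str:
    """Render the server block, indenting each location block by four spaces."""
    body = "".join(location_block(s, in_development) for s in services)
    indented = "".join(f"    {line}\n" for line in body.splitlines())
    return "server {\n    listen 80;\n\n" + indented + "}\n"


# Ports from the example; service1's host port (5000) is an assumption.
SERVICES = [Service("service1", 5000, 5000), Service("service2", 5000, 5001)]

if __name__ == "__main__":
    # service2 is being worked on in the IDE; service1 stays in its container.
    print(server_block(SERVICES, {"service2"}))
```

Switching which service runs in the IDE then only means changing the set passed to `server_block`, writing the result into the mounted nginx configuration and restarting the nginx container.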


```yaml
version: "3.6"
services:
  nginx:
    image: nginx:1.15.7-alpine
    ports:
      - 8081:80
    networks:
      - backend
    volumes:
      - type: bind
        source: ./config/nginx.conf
        target: /etc/nginx/nginx.conf
    command: [nginx-debug, '-g', 'daemon off;']
  service1:
    image: myapp1
    ports:
      - 5000:5000
    networks:
      - backend
  service2:
    image: myapp2
    ports:
      - 5001:5000
    networks:
      - backend
networks:
  backend:
```


```nginx
# HOST NGINX CONF
user nginx;
worker_processes 1;

error_log /var/log/nginx/error.log warn;
pid       /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include      /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    keepalive_timeout 65;

    server {
        listen 80;

        location /service1/ {
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_pass http://service1:5000;
        }

        location /service2/ {
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            # Inside the Docker network nginx talks to the container port (5000),
            # not to the host port 5001 published in docker-compose.yml.
            proxy_pass http://service2:5000;
        }
    }
}
```


```nginx
# HOST NGINX CONF
user nginx;
worker_processes 1;

error_log /var/log/nginx/error.log warn;
pid       /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include      /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    keepalive_timeout 65;

    server {
        listen 80;

        location /service1/ {
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_pass http://service1:5000;
        }

        location /service2/ {
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            # service2 runs on the host (started from the IDE on port 5001), so nginx
            # forwards the request out of the Docker network. Stop the service2
            # container first so that port 5001 on the host is free.
            # host.docker.internal resolves to the host on Docker Desktop; on Linux it
            # may need an extra_hosts entry "host.docker.internal:host-gateway"
            # (Docker 20.10+).
            proxy_pass http://host.docker.internal:5001;
        }
    }
}
```
